Azure Integration Services

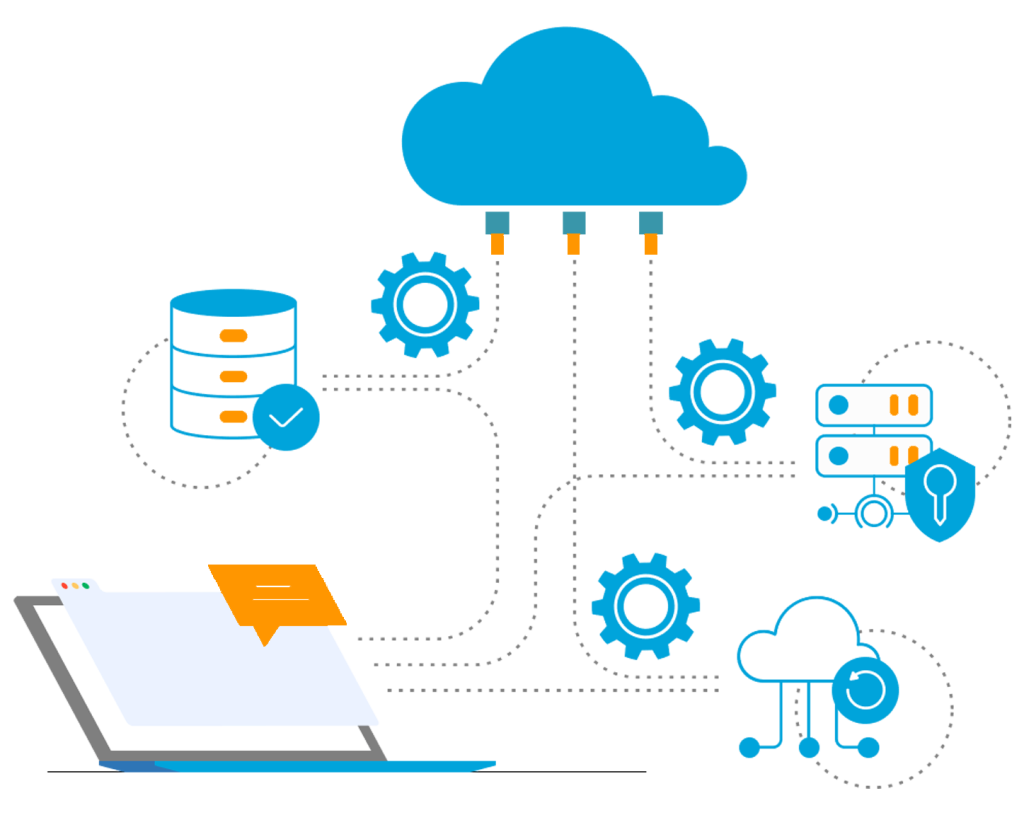

Integrating Microsoft Azure with Power BI pairs cloud-scale data storage and advanced analytics with interactive visualization and reporting, giving businesses a single ecosystem for data-driven decision-making. Azure offers a broad suite of services, including databases, data lakes, machine learning, and AI tools; combined with Power BI, these services support real-time insights and interactive dashboards. The integration lets organizations connect very large datasets, streamline data workflows, and turn complex data into actionable intelligence.

Azure services such as Azure SQL Database, Azure Data Lake, Azure Synapse Analytics, and Azure Machine Learning work natively with Power BI, which makes data ingestion, processing, and analysis more efficient. Businesses can set up automated data pipelines that transform and prepare data within Azure before making it available to Power BI for visualization. The integration prioritizes security, compliance, and scalability, so sensitive data is handled securely across the cloud infrastructure while performance stays high.

With this integration, organizations can monitor business metrics in real time, forecast trends using machine learning models, and collaborate on insights through Power BI’s sharing capabilities. The integration also simplifies data access across departments, so teams can make faster, better-informed decisions. Together, Azure’s cloud capabilities and Power BI’s visualization tools cover the main needs of modern data analytics, from storage and processing to reporting, in support of digital transformation.

Key Features:

Seamless Data Connectivity

Automated Data Pipelines

Real-Time Dashboards

AI & Machine Learning Insights

Scalability & Performance

Security & Compliance

Custom Visualizations

Collaboration & Sharing

Scheduled Refresh & Alerts

Cross-Platform Access

Features of Custom Data Engineering:

Custom Data Engineering focuses on building tailored data pipelines and infrastructure to meet the unique needs of an organization. A fundamental feature is the design and construction of end-to-end data pipelines. This involves defining how data is ingested from various sources (databases, APIs, streaming platforms, etc.), transformed and cleaned according to specific business logic, and ultimately loaded into target systems like data warehouses, data lakes, or analytical databases. These pipelines are designed for efficiency, scalability, and reliability, ensuring a consistent flow of high-quality data.
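The ingest, transform, and load stages described above can be illustrated with a small, self-contained sketch. It is not tied to any particular Azure service: the sample CSV data, the `orders` table, and the function names `extract`, `transform`, `load`, and `run_pipeline` are all assumptions made for illustration, and an in-memory SQLite database stands in for the target warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; a real pipeline would read from a database, API, or stream.
RAW_CSV = """order_id,region,amount
1001, east ,250.00
1002,WEST,99.50
1003,east,
1004,West,410.25
"""


def extract(raw_text):
    """Ingest: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: normalize region names and drop rows with no amount."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # example business rule (assumed): skip incomplete orders
        cleaned.append(
            (int(row["order_id"]), row["region"].strip().lower(), float(amount))
        )
    return cleaned


def load(conn, records):
    """Load: write cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(raw_text):
    """Run all three stages against an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(raw_text)))
    return conn


conn = run_pipeline(RAW_CSV)
totals = conn.execute(
    "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"
).fetchall()
print(totals)
```

Keeping each stage as a separate function means the source or the target can be swapped without touching the business logic in `transform`, which is one common way to keep such pipelines maintainable.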

Another key aspect is data integration from diverse sources. Custom solutions are built to handle the complexity of integrating data from disparate systems, often with varying formats, structures, and velocities. This requires expertise in data extraction, transformation, and loading (ETL/ELT) processes, as well as the ability to work with different data storage technologies.

Furthermore, custom data engineering emphasizes data quality and governance. This involves implementing processes and tools for data validation, cleansing, and standardization to ensure accuracy and consistency. It also includes establishing data governance frameworks to manage data access, security, and compliance.
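As a rough illustration of integrating differently shaped sources and then applying validation and standardization, the sketch below maps a CSV source and a JSON source onto one common schema. The two sample sources, their field names, the date formats, and the validation rules are all hypothetical; real rules would come from the organization's own data governance requirements.

```python
import csv
import io
import json
from datetime import datetime

# Two hypothetical sources describing customers in different shapes.
CRM_CSV = (
    "CustomerID,Email,SignupDate\n"
    "7,Ana@Example.com,2024-03-01\n"
    "8,not-an-email,2024-03-05\n"
)
SHOP_JSON = '[{"id": "9", "email": " bo@example.com ", "signup": "05/03/2024"}]'


def from_crm(text):
    """Adapter: map CRM CSV columns onto the common field names."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"customer_id": row["CustomerID"], "email": row["Email"],
               "signup": row["SignupDate"], "fmt": "%Y-%m-%d"}


def from_shop(text):
    """Adapter: map shop JSON keys onto the common field names."""
    for item in json.loads(text):
        yield {"customer_id": item["id"], "email": item["email"],
               "signup": item["signup"], "fmt": "%d/%m/%Y"}


def standardize(record):
    """Cleansing: fix types, trim and lowercase emails, use ISO dates."""
    return {
        "customer_id": int(record["customer_id"]),
        "email": record["email"].strip().lower(),
        "signup": datetime.strptime(record["signup"], record["fmt"]).date().isoformat(),
    }


def validate(record):
    """Validation: return a list of problems; an empty list means the record passes."""
    problems = []
    local, sep, domain = record["email"].partition("@")
    if not (local and sep and "." in domain):
        problems.append("invalid email")
    if record["customer_id"] <= 0:
        problems.append("non-positive customer_id")
    return problems


def integrate(*sources):
    """Standardize every record, then route it to accepted or rejected."""
    accepted, rejected = [], []
    for source in sources:
        for raw in source:
            record = standardize(raw)
            problems = validate(record)
            if problems:
                rejected.append((record, problems))
            else:
                accepted.append(record)
    return accepted, rejected


accepted, rejected = integrate(from_crm(CRM_CSV), from_shop(SHOP_JSON))
print(accepted)
print(rejected)
```

Rejected records are kept alongside the reasons they failed rather than silently dropped, so data quality issues can be reviewed and traced back to the source system.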

Finally, performance optimization and scalability are critical features of custom data engineering. Solutions are designed to handle large volumes of data and high processing demands, often leveraging distributed computing frameworks and cloud-based infrastructure. Engineers focus on optimizing query performance, data storage strategies, and pipeline efficiency to ensure timely and cost-effective data processing. This often involves selecting the right technologies and architectures based on the specific data characteristics and analytical requirements of the organization.
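The idea behind scaling out with distributed computing can be sketched on a single machine: split the data into partitions, compute a partial aggregate per partition, then merge the partial results. The example below runs the partitions sequentially for simplicity; in a distributed framework each partition would typically be handled by a separate worker. The sample rows (amounts in whole currency units) and the names `partition`, `partial_totals`, and `merge` are assumptions for illustration only.

```python
from collections import Counter

# Hypothetical (region, amount) rows; amounts are integers to keep sums exact.
ROWS = [("east", 250), ("west", 100), ("east", 50), ("west", 400), ("north", 75)]


def partition(rows, size):
    """Split rows into fixed-size chunks that can be processed independently."""
    for start in range(0, len(rows), size):
        yield rows[start:start + size]


def partial_totals(chunk):
    """Aggregate one chunk on its own (the 'map' step)."""
    totals = Counter()
    for region, amount in chunk:
        totals[region] += amount
    return totals


def merge(partials):
    """Combine per-chunk aggregates into a final result (the 'reduce' step)."""
    combined = Counter()
    for partial in partials:
        combined.update(partial)  # Counter.update adds values key by key
    return combined


totals = merge(partial_totals(chunk) for chunk in partition(ROWS, size=2))
print(dict(totals))
```

This works because summation can be applied to partitions in any order and still give the same total; aggregates without that property, such as medians, need a different strategy.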